Airplane Thoughts: Computational Irreducibility, Global vs. Global Economics, Pace of Innovation

I’m on a plane for the next 5 hours as I head back to my old stomping ground - Boston. So, naturally, it’s time to keep my brain occupied. Remember, these are literally unvarnished, unedited thoughts so, uh… cut me some slack. Here comes a brain dump and another walk Beyond the Yellow Woods.

It’s hard to know exactly where to start this post as so much has happened over the last few weeks. Some of my posts ended up going somewhat viral (by my standards) which has given me some pause on what to write about.

One of the key ones was around Thermodynamic/Neuromorphic computing. So, let’s start there.

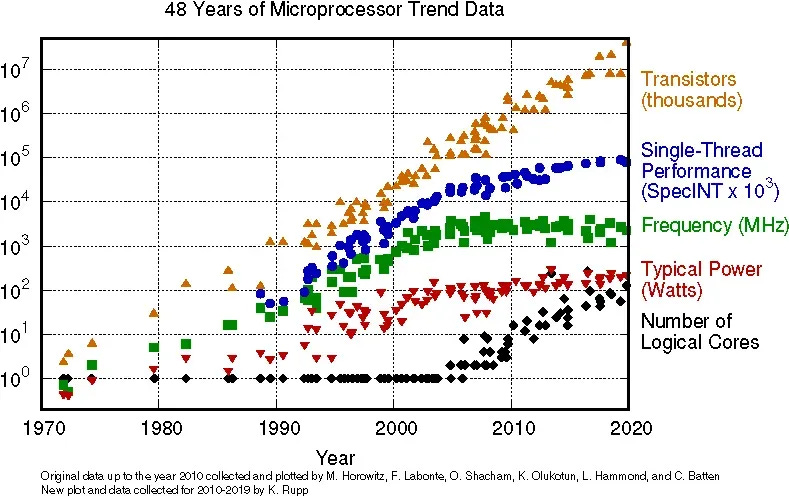

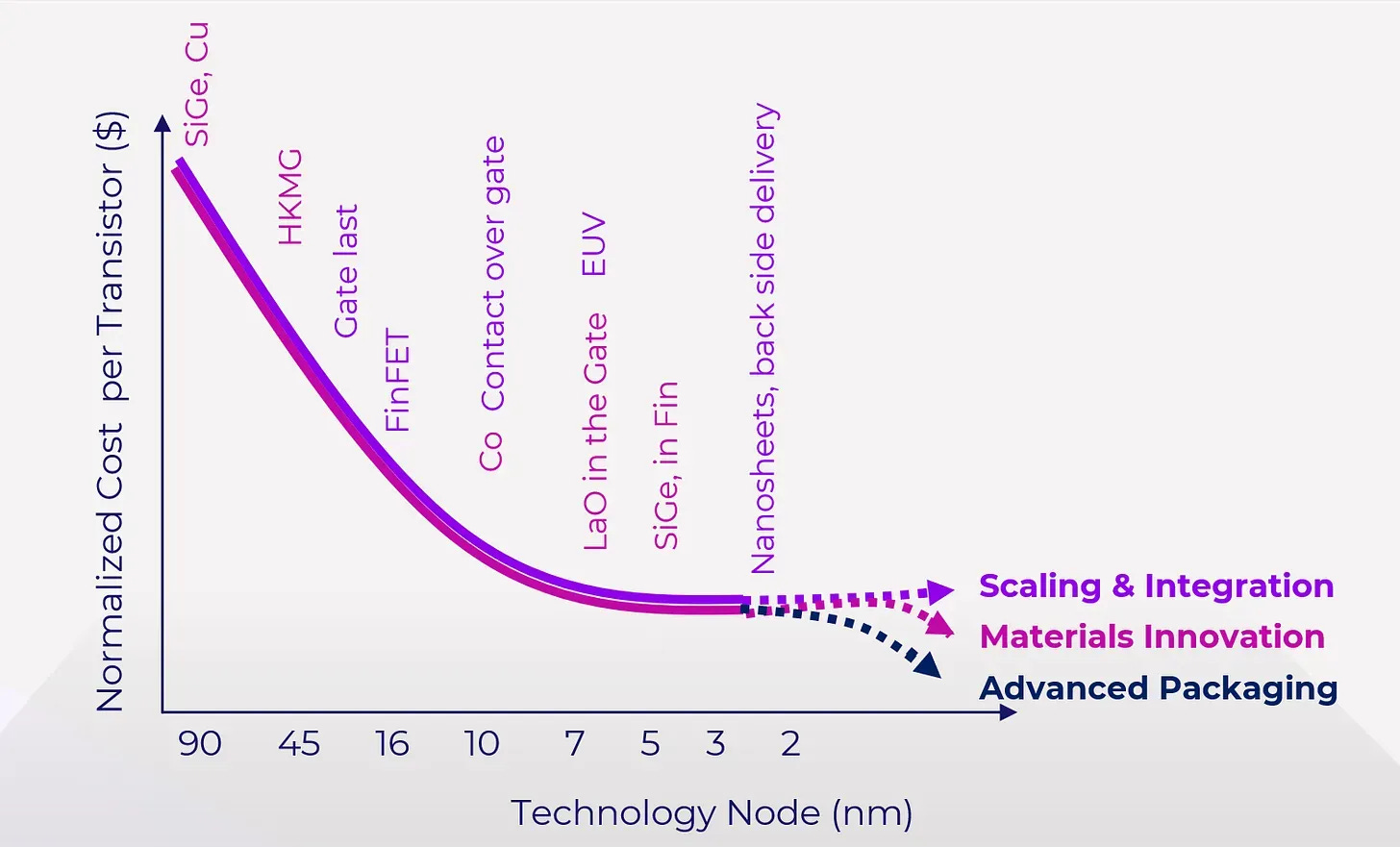

A while back, I wrote an article about how I believe the next evolution of computing is not quantum computing, like I had initially thought, but rather thermodynamic/neuromorphic computing. I had a brief exchange with Gill Verdon, the founder of Extropic, after they released an update on what they are working on. In that exchange, the thing that stands out to me the most, and that keeps proving true, is that we are fundamentally constrained within Newtonian physics on how small we can go before we start to get quantum weirdness effects. Gill calls this “Moore’s Wall” and I think that's 100% accurate. I go back to the charts above each time.

We’re getting significantly diminished returns within the semiconductor industry. Now, the above charts are focused on CPUs that operate on a serial computing basis. GPUs have extended Moore’s Law and, if you know me, I fucking love GPUs. I have since the early, early days of their initial debut onto the market within the gaming scene.

The challenge I’ve got comes down to the fundamental problem of how we compute in the future. I believe we’ll always have a need for things like CPUs and GPUs (CPUs specifically, from a base-level operations perspective). However, the actual alpha from computing is going to come within the transition from deterministic computing to probabilistic.

Here’s the base case why:

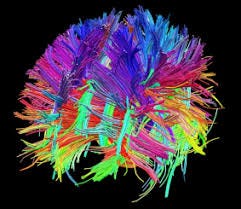

The human brain is a probabilistic model. Neurons actually have different weighted strengths depending on how long they stay “connected” (meaning, how often the neural pathway fires). The aggregate of this creates the connectome of the human brain.

Neural weights can be changed, also known as neuroplasticity. The way they get changed oftentimes comes down to packaged experiences of data. Think of that as folding laundry or riding a bike. It’s a set of activities with certain data inputs, training, and feedback loops towards a reward. Simple Pavlovian behavior in a way.

Another way of thinking about this is pulling in the concept of Memetic Theory. Tactical cultural units of training data that shift the connectome weighting structure. Perhaps not the whole connectome but aspects of it.

Over time, the weights get hardened, and neural plasticity reduces as you age primarily because, as adults, our Memetic packaging of diverse data reduces (aka. we get into the patterns of wake up, work, kids, eat, sleep, repeat).

The point of all of this is 2-fold:

1. The way we learn specific skills and experiences is through largely discrete and targeted Memetic data sets within a broader topical and topological space.

2. The act of learning is not a binary function but a probabilistic one, enabling the brain to generate variance limits when interacting with a Memetic data set.

I use this example all the time but folding laundry is such an incredibly complex task. We humans completely underappreciate the sheer complexity of folding a basket of laundry. The parameter space is immense and pushes the limits of computation. Think about it. As a human, on the fly, you have to account for the type of clothing, the size of the clothing, the material, the weight, the folding style, etc. You can go further with things like logos or colors. The act of folding is not one of deterministic properties but rather probabilistic values within a Euclidean space.

Could we solve this problem with deterministic computing? Probably, but it will be very difficult from an efficiency perspective. GPUs could certainly help here but they have poor efficiency at probabilistic values. And that is why I’m so bullish on thermodynamic/neuromorphic computing. It just makes sense and is the future of computation.

When I was at Unsupervised, we talked a lot about exploring unstructured data in non-Euclidean space. We went so far as to hire PhDs who specialized in topological data analysis so that we could identify metadata correlations without being bounded by a normal Euclidean parameter space. Furthermore, we even hired quantum physicists so that we could push the limits of probability by storing metadata in multiple “perspectives” so that when you “observed” a specific KPI, it would “collapse” into a particular value based on your position within the organization.

We ultimately did not succeed for a variety of reasons. One of the leading ones was the sheer computing cost to do this for each client because we started to hit the limits of computational irreducibility. We’d run into this because we’d feature engineer off of the metadata and expand a single unstructured metadata column into many. So, a dataset of 100 unstructured columns could explode into 1,000,000.
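To put rough numbers on that blow-up, here is a back-of-the-napkin sketch. The fan-out of 10,000 engineered features per raw column is an assumption, picked only so that 100 columns land on the 1,000,000 figure above; the function names are hypothetical, and the pairwise count is just one illustrative way the downstream work could scale, not a description of the actual pipeline.

```python
def expanded_columns(raw_columns: int, features_per_column: int) -> int:
    """Total engineered columns if every raw column fans out evenly."""
    return raw_columns * features_per_column


def pairwise_comparisons(columns: int) -> int:
    """Number of distinct column pairs you would need to score for correlations."""
    return columns * (columns - 1) // 2


raw = 100
fan_out = 10_000  # hypothetical fan-out, chosen so 100 columns become 1,000,000

wide = expanded_columns(raw, fan_out)

print(f"columns after expansion: {wide:,}")
print(f"column pairs before:     {pairwise_comparisons(raw):,}")
print(f"column pairs after:      {pairwise_comparisons(wide):,}")
```

The column count grows 10,000x, but anything that compares columns against each other grows far faster than that, which is one plausible reading of where the per-client compute bill comes from.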

To get around this, we introduced sophisticated manifold learning concepts to reduce the data from a higher-dimensional space down to a lower-dimensional one. It’s worth noting at this point that this was based on enterprise proprietary data. I think it would have worked much better with generalized data like the kind OpenAI or Anthropic train on, but alas, we didn’t have the foresight to switch to the consumer.
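For a feel of what "higher to lower dimensionality" means in practice, here is a minimal sketch. To be clear, this is a random projection, a much simpler linear stand-in, and not the manifold learning described above. The data is made up, and the sizes (1,000 columns squeezed down to 200) are arbitrary assumptions.

```python
import math
import random


def random_projection(rows, target_dim, seed=0):
    """Project each row onto target_dim random Gaussian directions."""
    rng = random.Random(seed)
    source_dim = len(rows[0])
    scale = 1.0 / math.sqrt(target_dim)  # keeps distances roughly the same size
    # One random direction per output dimension.
    directions = [
        [rng.gauss(0.0, 1.0) * scale for _ in range(source_dim)]
        for _ in range(target_dim)
    ]
    return [
        [sum(w * x for w, x in zip(direction, row)) for direction in directions]
        for row in rows
    ]


def distance(a, b):
    """Plain Euclidean distance between two rows."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


data_rng = random.Random(42)
wide = [[data_rng.random() for _ in range(1000)] for _ in range(5)]  # made-up rows
narrow = random_projection(wide, target_dim=200)

# How well did the distance between the first two rows survive the squeeze?
ratio = distance(narrow[0], narrow[1]) / distance(wide[0], wide[1])
print(len(wide[0]), "->", len(narrow[0]), "columns, distance ratio:", round(ratio, 2))
```

A ratio near 1 means the geometry mostly survived with a fifth of the columns. Real manifold learning tries to do better than this by following the curved structure of the data rather than projecting along random straight lines.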

If we had access to a probabilistic computing infrastructure, I think the game would be totally different. We had many internal discussions about what mattered to us more: precision or directional accuracy. In my opinion, directional accuracy would have worked better than traditional analytics and, perhaps, if we had access to a probabilistic computing architecture, we could have had our cake and eaten it too. But we were relegated to deterministic computing.
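The precision vs. directional accuracy trade-off is easier to see with numbers. This is a minimal sketch with made-up KPI values; the two function names and the definition of "directional accuracy" used here (did the forecast get the sign of the next move right?) are assumptions for illustration, not how it was measured at the time.

```python
def mean_absolute_error(actual, predicted):
    """Precision: how far off the forecast is, on average."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def directional_accuracy(actual, predicted):
    """Share of period-over-period moves where the forecast got the sign right."""
    hits = 0
    moves = len(actual) - 1
    for i in range(1, len(actual)):
        actual_move = actual[i] - actual[i - 1]
        predicted_move = predicted[i] - actual[i - 1]
        # A flat move (zero change) never counts as a hit.
        if actual_move * predicted_move > 0:
            hits += 1
    return hits / moves


actual = [100, 110, 105, 120, 115]    # made-up KPI values
predicted = [100, 125, 90, 140, 100]  # made-up forecast: sloppy on size, right on direction

print("mean absolute error: ", mean_absolute_error(actual, predicted))
print("directional accuracy:", directional_accuracy(actual, predicted))
```

This forecast misses by 13 points on average, which looks bad on a precision scorecard, yet it calls every single up and down move correctly. For a lot of business decisions, knowing the direction is the part that pays.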

I’ve been staring out the window for a bit thinking about this and I think I’ve said all I need to say. With the advent of the transformer and LLMs, the future is probabilistic. To get to this new future, we must rethink the entire stack at a fundamental level. By entire stack, I mean all the way down to the instruction set for the processors. The whole thing has to change, which requires an entirely new computing paradigm. Nvidia did this with GPUs and their CUDA architecture. We’ll need to do this for the new probabilistic era. This will cost a fortune and be very difficult to pull off but, as Gill and Extropic have argued, the risk:reward is worth it because we can get 1,000x or more gains in computing efficiency.

The way I personally look at this risk-to-reward function is how much the computational irreducibility frontier expands. If you think of it as a sort of “big bang” cone, there is only so much within the cone that we can compute because of computational irreducibility. With this new computing paradigm, we can start to compute much more of the range of the cone.

On a totally, totally different subject, I think it’s worth talking about the market for a hot second.

Something I wish we could teach the broader public is the concept of warping money through the vehicle of interest rates. This is what the Fed does through its policy rate, which in turn moves treasury rates.

It’s actually quite a brilliant strategy when it comes to providing a parameter space around the expansion/contraction of the economy on different time horizons. In simplistic terms, when rates are low, it incentivizes spending in the now so that the future has a higher prospective outcome (GDP, wage increases, etc.). When rates are high, it means we want to push for a more conservative economy with a better prospect for investment in the future.
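One simple way to see the "warping" is the standard present value formula: a dollar amount in the future is worth `future / (1 + rate) ** years` today. The sketch below uses made-up rates (1% and 5%) and a made-up $1,000 payoff ten years out; the function name is hypothetical.

```python
def present_value(future_amount: float, annual_rate: float, years: int) -> float:
    """What a future payment is worth today at a given annual interest rate."""
    return future_amount / (1 + annual_rate) ** years


payoff = 1_000  # made-up: dollars received 10 years from now
low = present_value(payoff, 0.01, 10)   # low-rate world
high = present_value(payoff, 0.05, 10)  # high-rate world

print(f"at 1%: ${low:,.2f} today")   # roughly $905
print(f"at 5%: ${high:,.2f} today")  # roughly $614
```

Same future payoff, very different value today. At low rates the future is barely discounted, so spending and investing now looks cheap; at high rates the future gets discounted hard, so the incentive shifts toward holding cash and saving.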

I’m not a big fan of the Federal Reserve but I do get the why of its existence. The challenge we’ve got in the USA is that the general population doesn’t realize that we are the global reserve currency - whether we like it or not. We are running into Triffin’s Paradox where the domestic needs and the international needs of our currency, the USD, are at odds with each other.

It’s our central bank versus everyone else’s. Except it’s less of an “us vs. them” and more of a “we’re the quarterback and we need everyone on the field to play the right play or else we lose”. That’s what happens when >70% of global transactions are settled in the USD. Unfortunately, I spend a lot of time worrying about the protectionist/nationalist nature of our Republican regime versus the globalist/whatever-the-fuck-gets-votes regime of the Left.

Both parties fundamentally suck right now, although I certainly lean more right at the moment. Why? Because they have hilariously become the party of progress from a human flourishing perspective.

I don’t think the general population realizes how much further we could be if we just unburdened the population from unnecessary red tape. We would not be where we are if we had significant red tape all the way through the last century. However, it’s clear that we created our own demise since the 70s. Think about it… we no longer have prolific nuclear energy growth or supersonic flight. The path that they were on had the potential to create dramatic deflationary pricing that benefited consumers.

That’s 50 years. Since 2008, you’ve had an iPhone with Google Maps that can navigate you virtually anywhere in the world, let you meet with anyone over FaceTime, and order virtually anything you’d want from anywhere on the globe with <48 hours delivery.

How in the absolute living fuck do we not have air travel that goes faster than 500mph? Why are we even talking about the cost of electricity in the form of kWh??

Seriously. In the last 15 years, we’ve had such an insane amount of innovation in just the internet technology sector. The space race is kicking off another element of this. We dramatically underestimated what we could do in the internet sector. Did you ever think you could order something online and get it the same day?

Are we just dramatically underestimating what other sectors could be doing based on what we’ve been told? Are we just optimizing for the local minimum of the memetic dataset that the prior generation has installed? (my opinion? yes, we are).

As Peter Thiel famously said, “We wanted flying cars, instead we got 140 characters”. We should have progressed much further than we have and we have, unfortunately, become our own worst enemy.

Part of me thinks that FDR’s old global vision of the Four Freedoms needs to be reviewed and refreshed in the age of Globalism. For what it’s worth, I don’t subscribe to the concept of hypernationalism. It’s a point on which I disagree with Republicans. When they say stuff like “We should make toasters in the USA!” my immediate thought is “FFS, I don’t want my toaster to cost $185; I like it costing $15”. There’s a world where we could go back to total isolationism, and in that world, everything costs 10x more, there are 10x fewer options, and we don’t get the pressured dynamism or innovation that globalism provides.

However.

And that’s a big however. We must not sacrifice ourselves. And when I say “ourselves” I mean the whole globe.

This is where I think Boomer thinking and Millennial thinking differ. Boomers grew up in a time when we were emerging as the world leader. Millennials are growing up in a time when we are the global leader. That’s a massive difference. Boomers didn’t need to compete globally in the same way or manner that Millennials do. When Ohio elects a state rep, it honestly is not just Ohio electing that individual but rather Vietnam, Taiwan, India, etc., that are electing them. Yeah, it’s a problem but it’s a problem that also cannot be solved by simply electing someone who just ignores the international demands. Why? Because if you ignore those demands, you hollow out the core of the local economy (e.g. manufacturing jobs).

In my opinion, the only way that we could get around that is if we make a significant leap in robotics in the next 10 years so that we can reduce labor costs to that of an emerging economy/country. That is a big ask. We have a slim shot of achieving that but man, a lot has to go right between now and then to get there.

I’ve written less than I expected on this flight but hey, that’s how it goes sometimes.

I’m landing in Boston in 20 minutes. I hope this rambling read was interesting to some of you. It’s a constant learning game within the arena and the only way to progress is to risk your opinions in the open market. In that vein, give me your feedback. What are your thoughts on all of this?

Cheers,

Ryan